Detecting Lane Lines

In the Self-Driving Car Engineer “nanodegree” on Udacity, our first project was to write a pipeline that finds lane lines in an input video stream.

Processing a video sounds daunting, but it’s really just a series of images that we have to deal with. And since we are given the right tools to manipulate those images, it only takes some patience and tinkering (and perhaps a sleepless night). The process is documented in a Jupyter notebook, and it includes roughly the following steps:

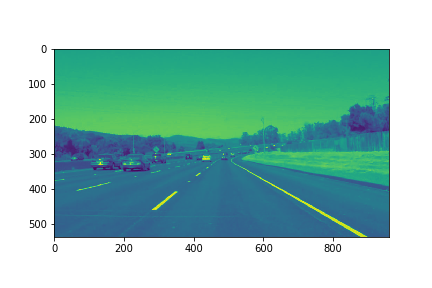

- creating a grayscale version of the image with only one color channel:

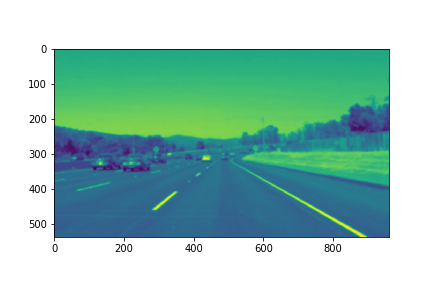

- applying a Gaussian blur to reduce noise (see the Wikipedia article on Gaussian blur):

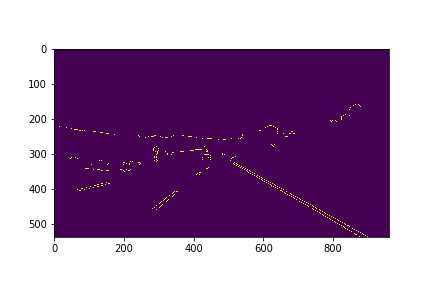

- using Canny edge detection (see the Wikipedia article on the Canny edge detector):

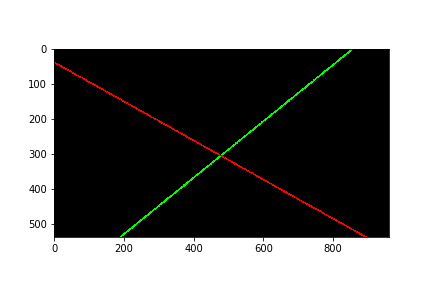

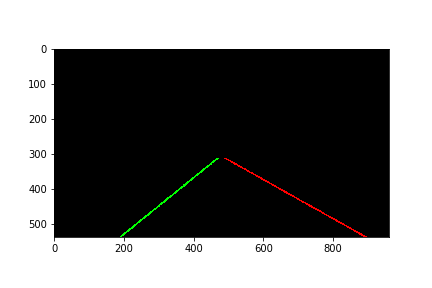

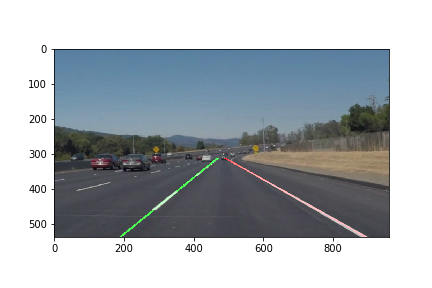

- applying a Hough transform to draw lines. This uses the custom `draw_lines()` function, which takes the line segments returned by the Hough transform and tries to draw the two lane lines on the image (extrapolated lines). It validates the slope of each segment, decides whether the segment belongs to the left or the right line, averages the coordinates of the segments on each side to get a single line, and falls back on a cache if the current image does not provide enough data.
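The logic described for `draw_lines()` can be sketched in plain Python. This is a minimal illustration, not the notebook's actual code: the helper names (`split_by_side`, `average_and_extrapolate`, `lane_lines`), the slope limits, and the `min_segments` threshold are all assumptions made for the example. Segments are `(x1, y1, x2, y2)` tuples in image coordinates, where `y` grows downward.

```python
def order_bottom_first(segment):
    """Return the segment with its lower endpoint (larger y) first."""
    x1, y1, x2, y2 = segment
    return (x1, y1, x2, y2) if y1 >= y2 else (x2, y2, x1, y1)


def split_by_side(segments, min_slope=0.4, max_slope=3.0):
    """Slope validation plus left/right assignment (limits are assumed values)."""
    sides = {"left": [], "right": []}
    for x1, y1, x2, y2 in segments:
        if x1 == x2:
            continue  # vertical segment: slope is undefined, skip it
        slope = (y2 - y1) / (x2 - x1)
        if not (min_slope <= abs(slope) <= max_slope):
            continue  # too flat or too steep to be a lane line
        # y grows downward, so the left lane line has a negative slope.
        side = "left" if slope < 0 else "right"
        sides[side].append(order_bottom_first((x1, y1, x2, y2)))
    return sides


def average_and_extrapolate(segments, y_bottom, y_top):
    """Average the endpoint coordinates, then extend the line to both y values."""
    n = len(segments)
    x1, y1, x2, y2 = (sum(s[i] for s in segments) / n for i in range(4))
    slope = (y2 - y1) / (x2 - x1)

    def x_at(y):
        return round(x1 + (y - y1) / slope)

    return (x_at(y_bottom), y_bottom, x_at(y_top), y_top)


def lane_lines(segments, y_bottom, y_top, cache, min_segments=2):
    """Return one line per side, reusing the cached line when data is scarce."""
    result = {}
    for side, side_segments in split_by_side(segments).items():
        if len(side_segments) >= min_segments:
            cache[side] = average_and_extrapolate(side_segments, y_bottom, y_top)
        result[side] = cache.get(side)  # None if nothing was ever cached
    return result
```

Passing the same `cache` dictionary on every frame of a video is what lets a frame with too few valid segments reuse the last good line.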

- applying a mask to the image (we only want to draw lines on the road):

- putting the lines on the original image:

This is how it looks on two simpler video inputs:

And on a more difficult one:

Written on October 28, 2017

If you notice anything wrong with this post (factual error, rude tone, bad grammar, typo, etc.), and you feel like giving feedback, please do so by contacting me at samubalogh@gmail.com. Thank you!